Introduction

Every online community faces the same challenge: how do you keep conversations safe and respectful without slowing everyone down? Spam and obvious abuse are easy to block, and clean content should pass through instantly. The hard part is everything in between: posts that might be harmful or might be fine, depending on context. That’s where moderation becomes difficult.

Using only AI leads to mistakes that can frustrate users. Depending only on humans slows everything down. The best solution is to combine both. In this project, we’ll build a content moderation system that uses OpenAI to analyze text and image URLs, Motia to manage the workflow, and Slack to bring in human reviewers only when needed.

Here’s how it works: when content is submitted, AI gives it a confidence score. Motia automatically approves or rejects the clear cases. Anything uncertain is sent straight to Slack. Reviewers can approve, reject, or escalate with a single click, and Motia takes care of the rest. The result is fast, simple, and keeps human effort focused where judgment really matters.

Why This Architecture

Traditional content moderation spans multiple services: analysis, routing, notifications, webhook handling, and actions, each requiring separate deployments, monitoring, and complex coordination.

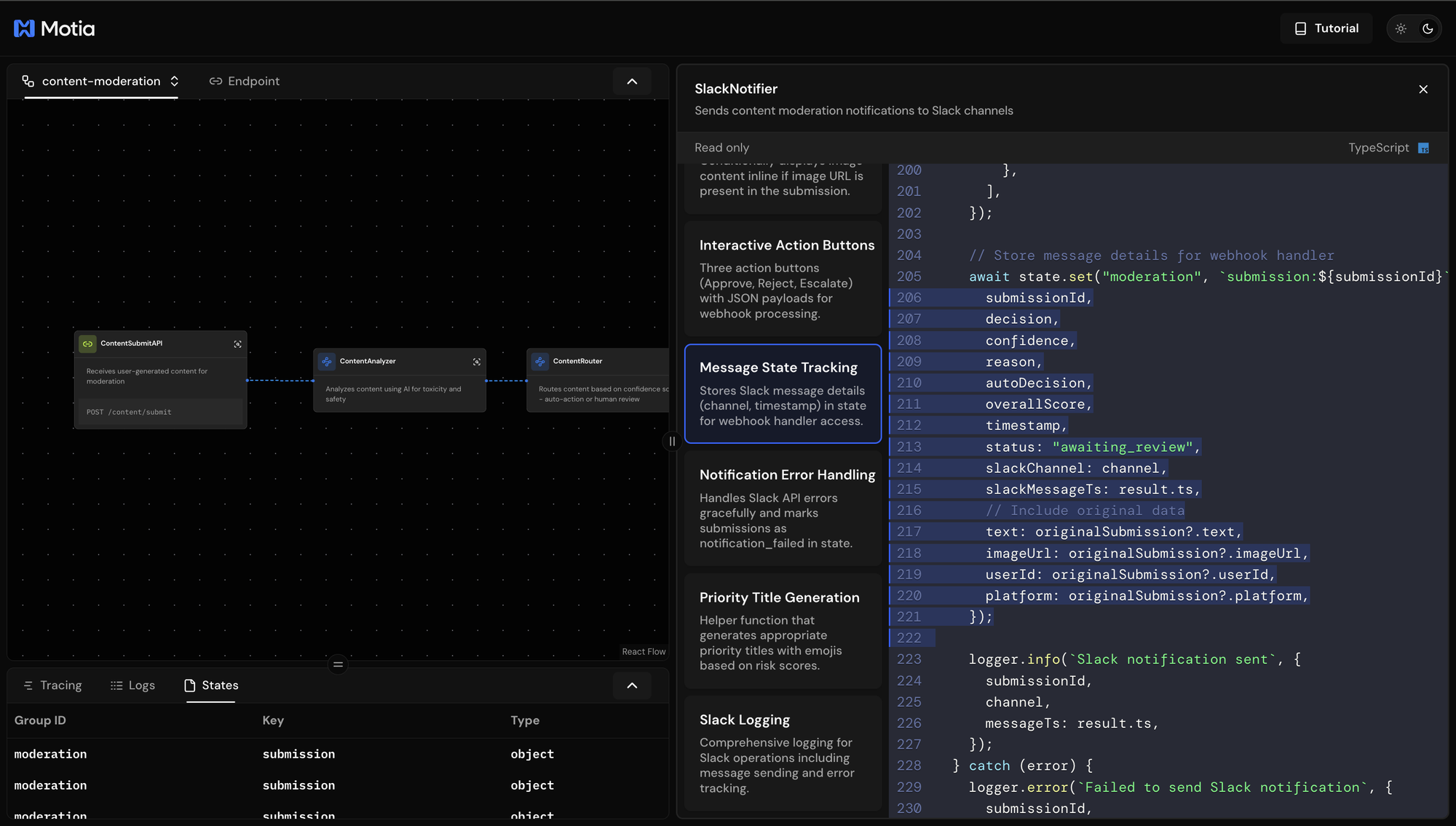

Motia simplifies this by adopting an event-driven design where each step is a focused function that listens for events and emits new ones. The framework automatically manages service orchestration, state persistence, error recovery, and observability. This eliminates the overhead of microservice coordination while preserving modularity and separation of concerns.

Think of Motia as building blocks: adding new features means adding new event-driven steps, not rebuilding infrastructure. This approach speeds up development, improves reliability, and makes the system easier to extend and maintain.

Project Overview

Here's how the content moderation system works when someone submits content:

- Content Submission → API endpoint accepts optional text and/or optional image URL, plus required user metadata (userId, platform). At least one content type (text or imageUrl) must be provided.

- AI Analysis → OpenAI analyzes text for toxicity using the Moderation API and evaluates images with GPT-4o-mini's vision capabilities by fetching them from the provided URLs. It returns confidence scores for each content type.

- Decision Routing → Content with very low risk (≤5%) gets auto-approved, very high risk (≥95%) gets auto-rejected, and uncertain content (5-95%) routes to human review.

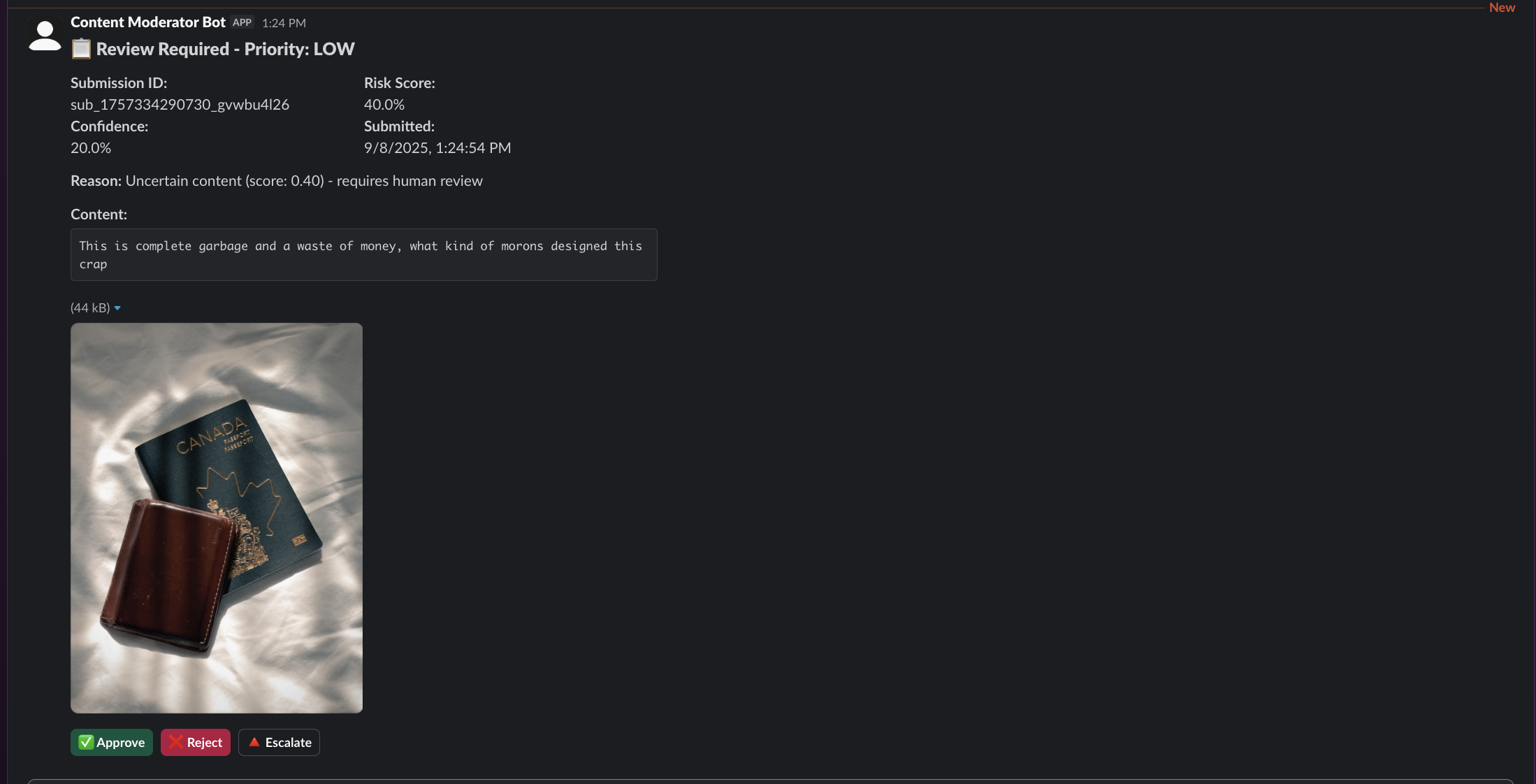

- Slack Notification → Human reviewers receive Slack messages showing the content (text displayed in code blocks, images embedded from URLs) along with AI risk scores and interactive approve/reject/escalate buttons.

- Human Decision → Reviewers click buttons in Slack, triggering webhook calls that capture decisions and moderator information.

- Action Execution → System executes final moderation decisions, updates original Slack messages with completion status, and handles escalations to senior review channels.

The six main processing steps are:

- Content Submit API: Receives text and/or image URL submissions, validates input, generates unique submission IDs

- Content Analyzer: Uses OpenAI Moderation API for text and GPT-4o-mini Vision for images, stores original data in state

- Content Router: Routes based on confidence thresholds - auto-decisions for extreme scores (≤5% or ≥95%), human review for uncertain content (5-95%)

- Slack Notifier: Sends interactive messages to risk-based channels, displays actual content and metadata with action buttons

- Slack Webhook: Handles button interactions, processes approve/reject/escalate decisions (signature verification bypassed in demo)

- Action Executor: Executes final moderation actions, updates Slack messages, maintains complete audit trail

Each step only runs when needed. Clean content (≤5% risk) gets auto-approved and skips human review entirely. Toxic content (≥95% risk) gets auto-rejected without human intervention. Only uncertain content (5-95% risk) triggers the full human review workflow through Slack.

The API → analyzer → router → human review → action flow means extending functionality just requires adding new steps. If you want custom notification rules, you can easily add a step between routing and Slack, or if you need escalation workflows, insert a step after human decisions.

Prerequisites

To follow along with this tutorial, you should have:

- Node.js 18+ - Download the latest LTS version from nodejs.org.

- OpenAI API key - Sign up at platform.openai.com and create an API key. Ensure you have access to a vision-capable model such as GPT-4o-mini, which this project uses for image analysis.

- Slack workspace with admin access - You will need to create a Slack app, configure webhooks, and set up bot permissions. If you don't have admin access, create a test workspace. Your Slack app will also need the chat:write, chat:write.public, and commands scopes.

- ngrok or similar tunneling tool - Required to expose your local webhook endpoint to Slack during development. Install from ngrok.com.

- Three Slack channels for content routing:

  - #content-moderation for normal priority reviews

  - #content-urgent for high-risk content

  - #content-escalated for escalated decisions

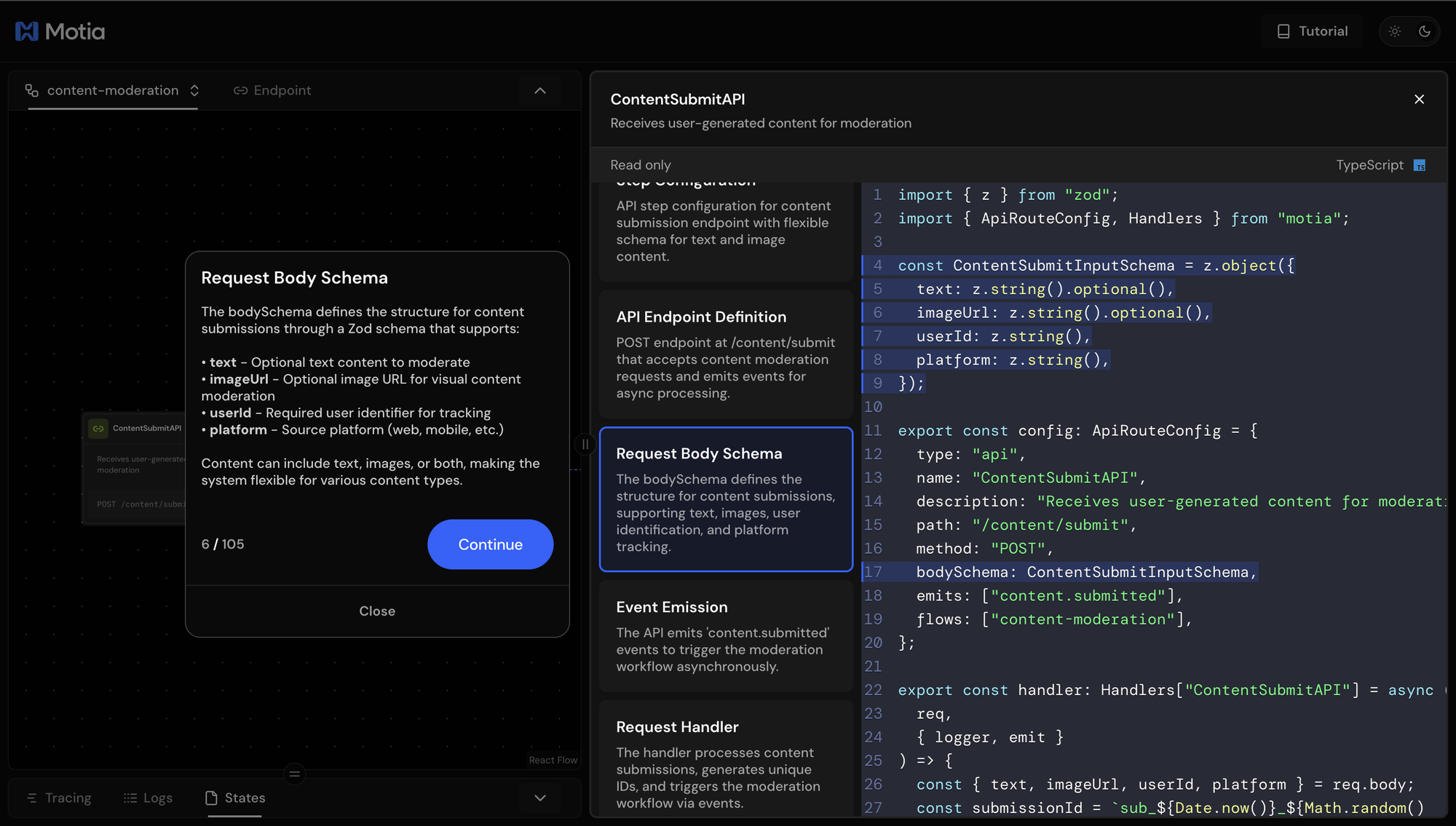

Interactive Tutorial

Before diving into the technical implementation, you can experience the complete workflow hands-on with Motia's built-in interactive tutorial system. Get started by clicking on the "Tutorial" icon in the top-right.

Get Started in 2 Minutes

Clone the complete working example using the command below:

    git clone https://github.com/MotiaDev/motia-examples.git
    cd motia-examples/examples/ai-content-moderation
    npm install
    npm run dev

Once your development server is running:

- Open the Motia Workbench in your browser

- Click the Tutorial button in the top-right corner

- Follow the guided walkthrough that will:

  - Walk you through each step of the moderation pipeline

  - Show you the actual code with highlighted sections

  - Let you test the API endpoints live

  - Demonstrate the Slack integration workflow

  - Guide you through the observability tools (tracing, logs, state)

This interactive tutorial provides a hands-on understanding of how AI analysis, confidence-based routing, and Slack integration work together. Throughout this guide, we'll reference specific tutorial steps to show you exactly where to find features and code sections in the Workbench.

Configuration Setup

Environment Variables

Create a .env file in the project root with your API credentials:

    OPENAI_API_KEY=your_openai_api_key_here
    SLACK_BOT_TOKEN=xoxb-your-slack-bot-token
    SLACK_SIGNING_SECRET=your_slack_signing_secret
    SLACK_CHANNEL_MODERATION="#content-moderation"
    SLACK_CHANNEL_URGENT="#content-urgent"
    SLACK_CHANNEL_ESCALATED="#content-escalated"

Expose Webhook Endpoint

Since Slack needs to send webhook requests to your local development server, use ngrok to create a public URL. Run this command in your terminal to expose port 3000:

ngrok http 3000

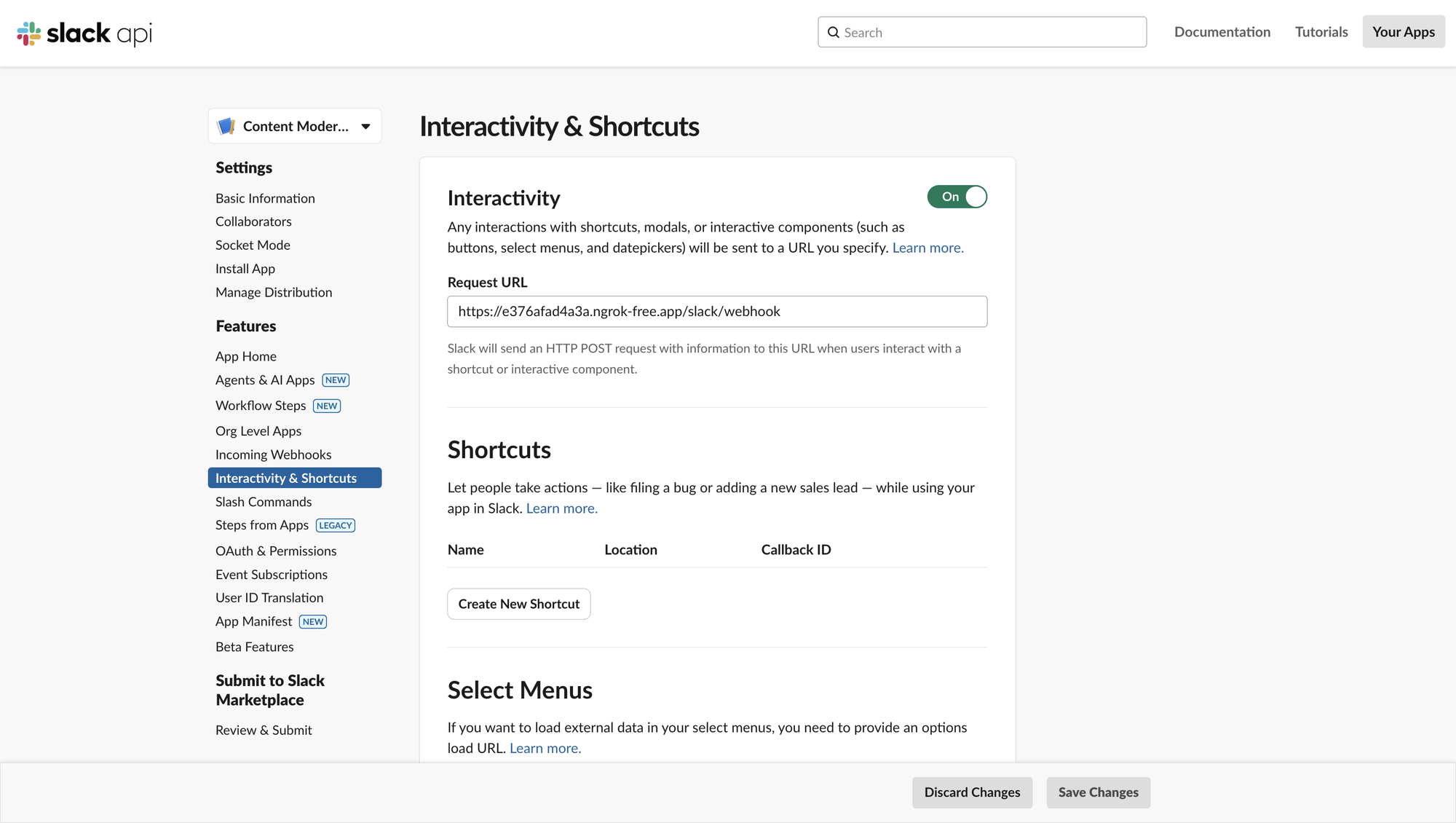

Copy the HTTPS URL (e.g., https://d3a651396019.ngrok-free.app) and configure it in your Slack app's Interactive Components settings as: https://your-ngrok-url.ngrok-free.app/slack/webhook

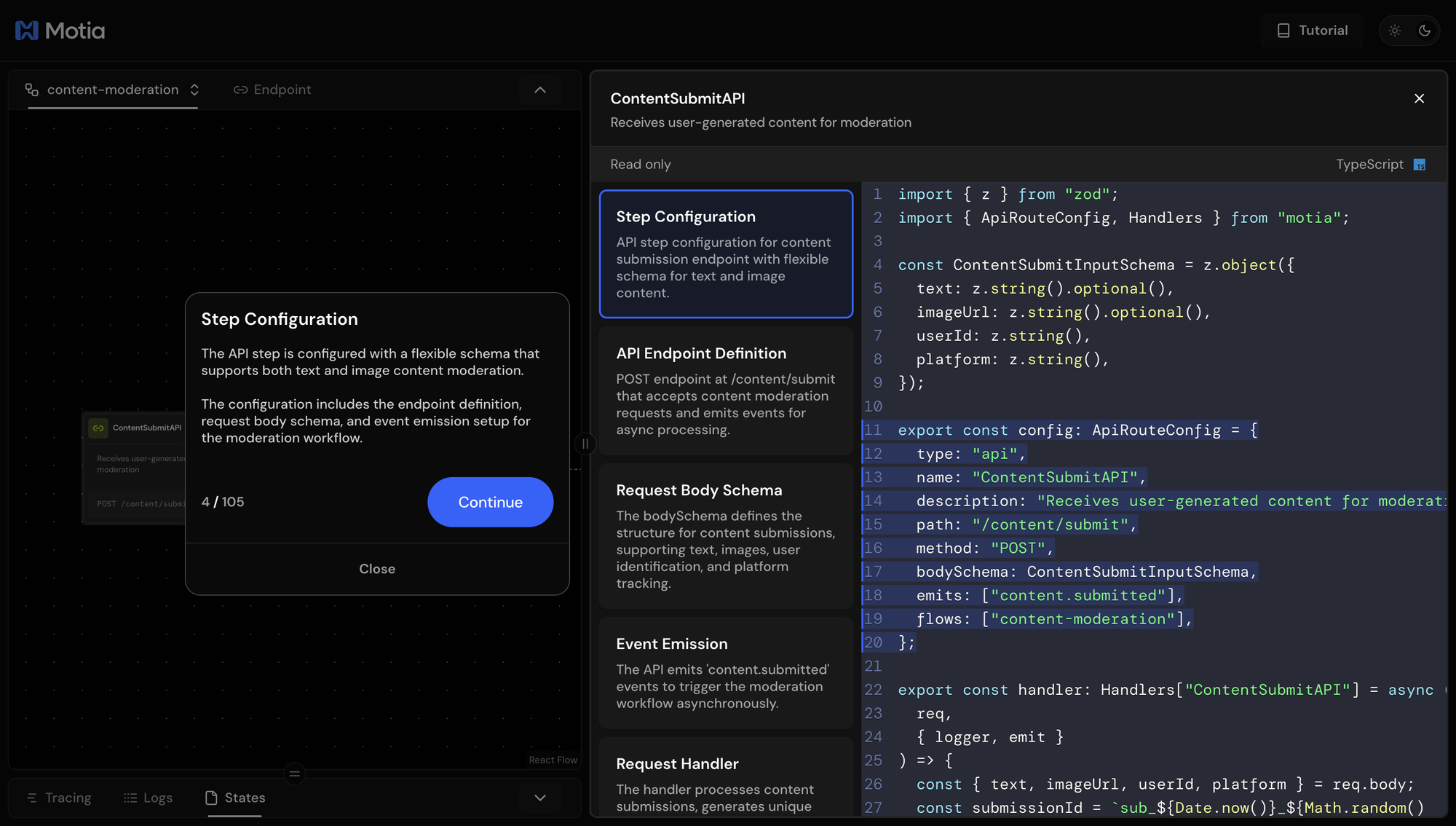

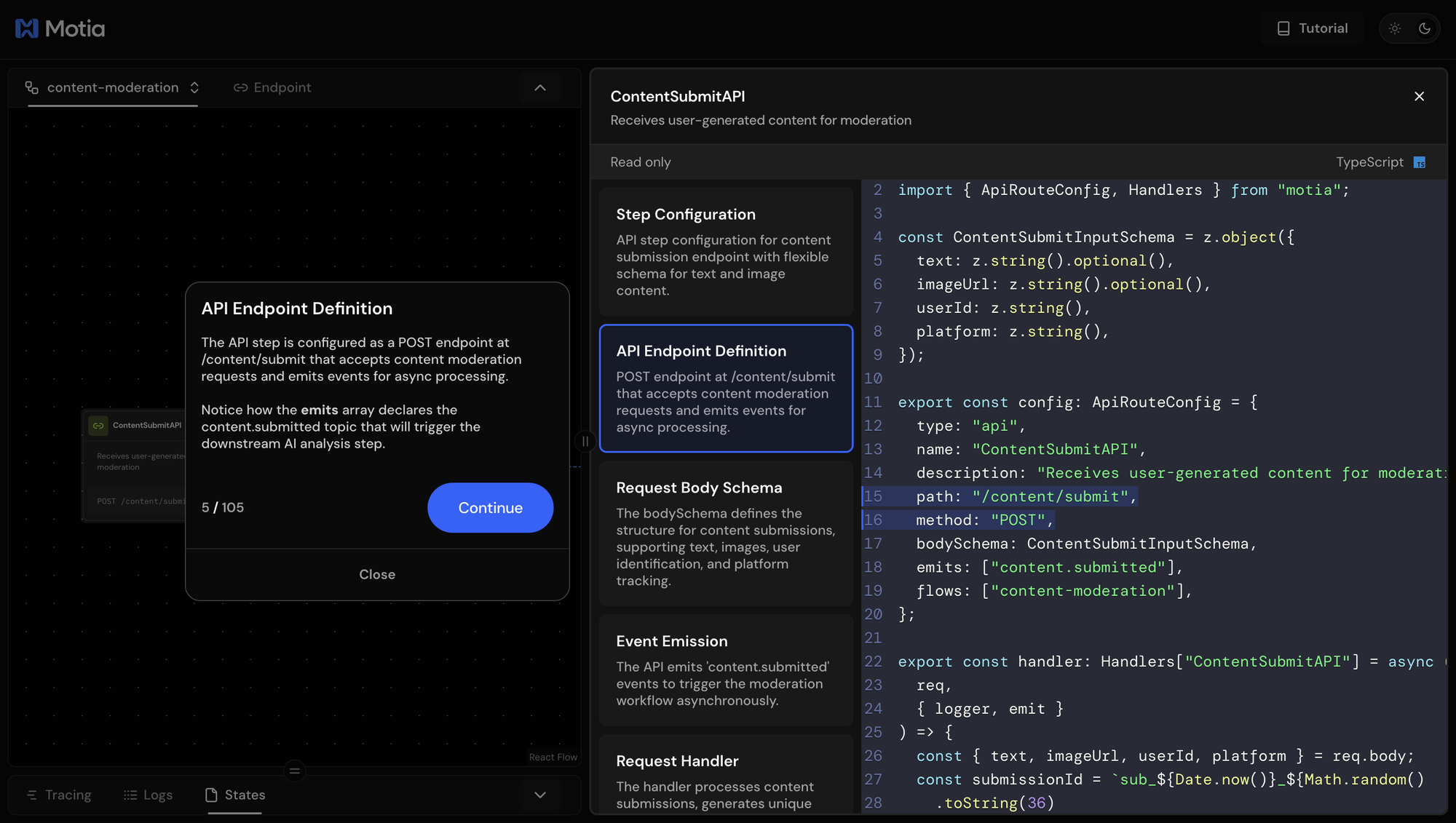

The First Step: Content Submission API

The first step creates an HTTP endpoint that receives user-generated content and starts the moderation workflow.

What This Step Does

The API accepts text content, image URL, or both, validates the input, and emits a content.submitted event to trigger analysis. It generates unique submission IDs for tracking each piece of content through the workflow.

The configuration defines this as an API endpoint that accepts POST requests and emits events when content is submitted.

Input Validation

The step uses Zod schemas to validate that submissions contain text, an image URL, or both.

This ensures every submission has content to analyze while allowing flexibility in content types. If you prefer a different validation framework like Joi or Yup, you can easily swap out Zod.
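The "at least one content type" rule can be sketched without any validation library. The following is a hand-rolled stand-in for the Zod schema, not the project's actual code: `Submission`, `ValidationResult`, `isHttpUrl`, and `validateSubmission` are hypothetical names, while the field names (`text`, `imageUrl`, `userId`, `platform`) come from the request format described earlier.

```typescript
// Hypothetical shape of a submission; field names follow the request format above.
interface Submission {
  text?: string;
  imageUrl?: string;
  userId: string;
  platform: string;
}

type ValidationResult =
  | { ok: true; value: Submission }
  | { ok: false; errors: string[] };

// Accept only absolute http(s) URLs for images.
function isHttpUrl(value: string): boolean {
  try {
    const url = new URL(value);
    return url.protocol === 'http:' || url.protocol === 'https:';
  } catch {
    return false;
  }
}

// Checks the required metadata and requires at least one of text or imageUrl.
function validateSubmission(input: unknown): ValidationResult {
  if (typeof input !== 'object' || input === null) {
    return { ok: false, errors: ['Body must be a JSON object'] };
  }
  const body = input as Record<string, unknown>;
  const errors: string[] = [];
  const nonEmpty = (v: unknown): v is string =>
    typeof v === 'string' && v.trim() !== '';

  const userId = nonEmpty(body.userId) ? body.userId : undefined;
  const platform = nonEmpty(body.platform) ? body.platform : undefined;
  const text = nonEmpty(body.text) ? body.text : undefined;
  const imageUrl = nonEmpty(body.imageUrl) ? body.imageUrl : undefined;

  if (userId === undefined) errors.push('userId is required');
  if (platform === undefined) errors.push('platform is required');
  if (imageUrl !== undefined && !isHttpUrl(imageUrl)) {
    errors.push('imageUrl must be an http(s) URL');
  }
  if (text === undefined && imageUrl === undefined) {
    errors.push('Provide text, imageUrl, or both');
  }

  if (errors.length > 0 || userId === undefined || platform === undefined) {
    return { ok: false, errors };
  }
  return { ok: true, value: { text, imageUrl, userId, platform } };
}
```

A body with only `userId` and `platform` fails with the "Provide text, imageUrl, or both" error, while a body with either content field passes.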

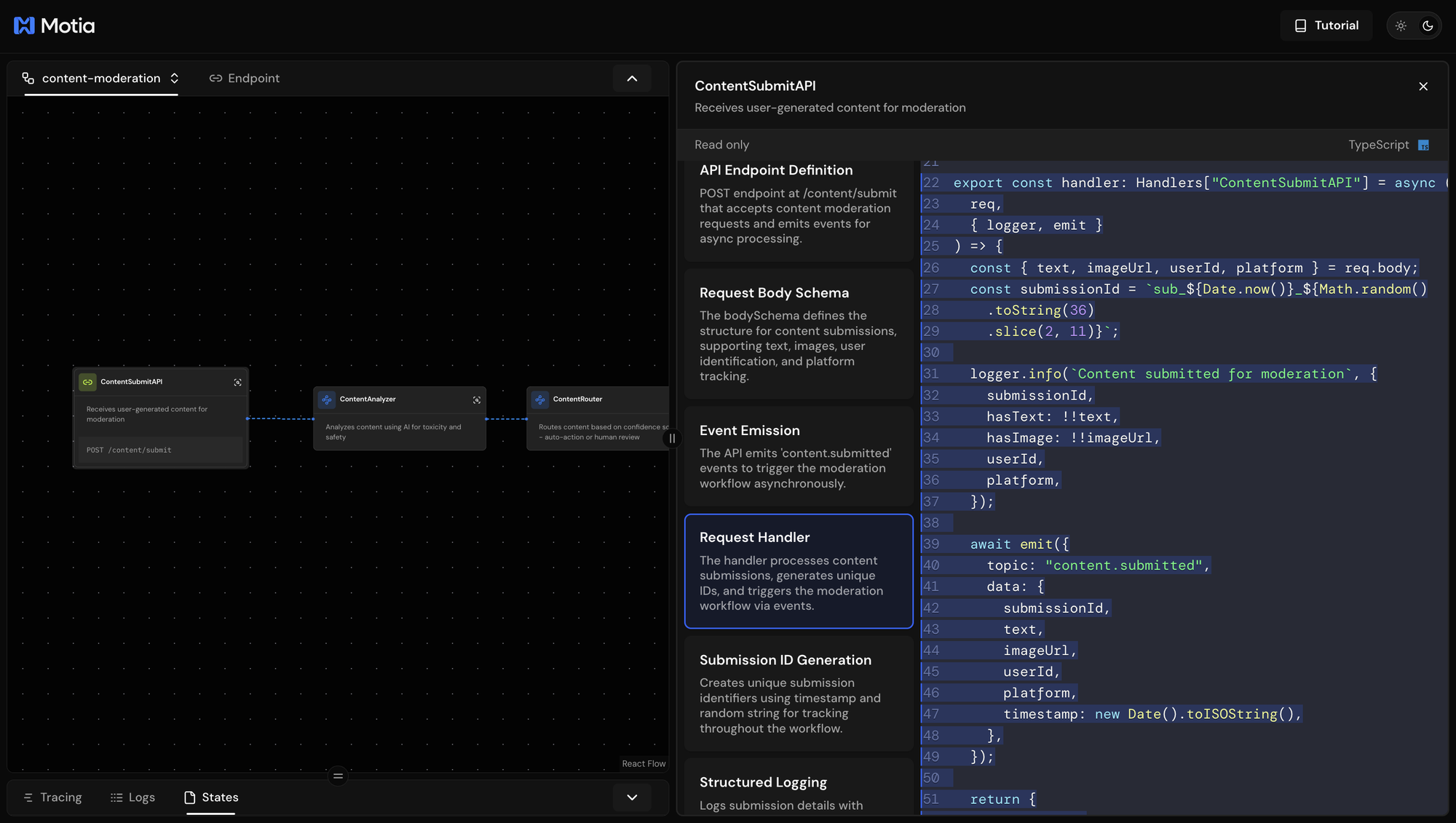

The Handler Function

The handler processes incoming requests and manages the workflow. It extracts the validated content from the request, generates a unique submission ID using a timestamp and random characters, logs the submission details for monitoring, and then emits the content.submitted event with all the necessary data for the next step. This pattern keeps each step focused on its specific responsibility while passing data seamlessly through the workflow.
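As a rough sketch of that flow, the handler can be written as a plain function that receives the validated body and an `emit` callback. This is an illustration under assumptions rather than the project's code: the `sub_` ID prefix, the `SubmissionBody` and `EmittedEvent` types, and the response message are hypothetical, and logging is omitted for brevity. The `{ topic, data }` event shape and the `{ status, body }` response shape follow the snippets shown in later steps.

```typescript
// Hypothetical types; in the real step these come from the framework.
interface SubmissionBody {
  text?: string;
  imageUrl?: string;
  userId: string;
  platform: string;
}

interface EmittedEvent {
  topic: string;
  data: Record<string, unknown>;
}

type Emit = (event: EmittedEvent) => Promise<void>;

// Builds an ID from a millisecond timestamp plus eight random base-36 characters.
// The "sub_" prefix is an assumption made for this sketch.
function generateSubmissionId(now: number = Date.now()): string {
  const random = Math.random().toString(36).slice(2, 10).padEnd(8, '0');
  return `sub_${now}_${random}`;
}

// Generates the ID, emits content.submitted, and returns an HTTP-style response.
async function handleSubmission(body: SubmissionBody, emit: Emit) {
  const submissionId = generateSubmissionId();

  await emit({
    topic: 'content.submitted',
    data: { submissionId, ...body, timestamp: new Date().toISOString() },
  });

  return {
    status: 200,
    body: { submissionId, message: 'Content submitted for moderation' },
  };
}
```

Passing `emit` in as a parameter keeps the function easy to test: a test can supply a callback that records events instead of publishing them.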

Testing the API

In the Workbench, navigate to the Endpoints tab and test with:

    {
      "text": "This is a test comment for moderation",
      "userId": "user123",
      "platform": "web"
    }

What Happens Next

When content is submitted, the handler emits a content.submitted event containing the submission data. This event automatically triggers the next step in the workflow: the AI content analyzer.

The beauty of Motia's event system is that steps don't need to know about each other. The API step just emits an event when content arrives. The analyzer step subscribes to that event and processes the content automatically.

This decoupled approach makes it easy to modify individual steps or add new functionality without changing the entire system.
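To make the decoupling concrete, here is a tiny in-memory publish/subscribe bus. It is only an illustration of the pattern and says nothing about how Motia implements events internally; `EventBus`, `submit`, and `analyzed` are hypothetical names.

```typescript
type Handler = (data: unknown) => void | Promise<void>;

// Minimal in-memory publish/subscribe bus, for illustration only.
class EventBus {
  private handlers = new Map<string, Handler[]>();

  // Register a handler for a topic.
  subscribe(topic: string, handler: Handler): void {
    const list = this.handlers.get(topic) ?? [];
    list.push(handler);
    this.handlers.set(topic, list);
  }

  // Deliver an event to every handler registered for its topic, in order.
  async emit(event: { topic: string; data: unknown }): Promise<void> {
    for (const handler of this.handlers.get(event.topic) ?? []) {
      await handler(event.data);
    }
  }
}

const bus = new EventBus();
const analyzed: unknown[] = [];

// The "analyzer" knows only the topic name, not who emits it.
bus.subscribe('content.submitted', (data) => {
  analyzed.push(data);
});

// The "API step" knows only the topic name, not who listens.
const submit = (text: string): Promise<void> =>
  bus.emit({ topic: 'content.submitted', data: { text } });
```

Adding a second subscriber to `content.submitted` would not require touching `submit`, which is the property the workflow relies on when new steps are inserted.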

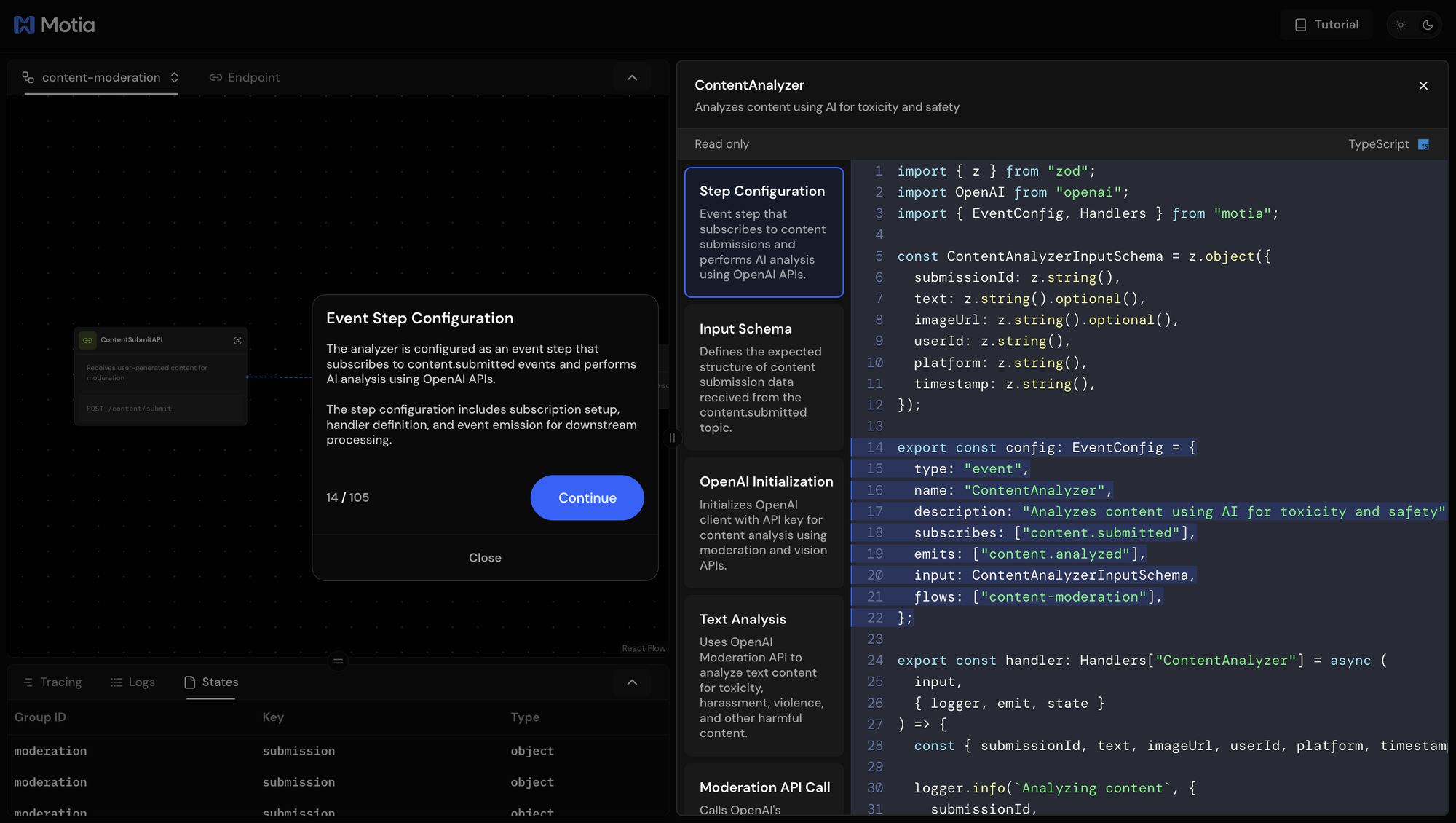

The Second Step: AI Content Analysis

The second step analyzes submitted content using OpenAI's APIs to determine toxicity levels and safety scores. This is where the system decides if content needs human review.

What This Step Does

The analyzer subscribes to content.submitted events and processes both text and images. For text, it uses OpenAI's Moderation API to detect toxicity, harassment, and policy violations. For images, it uses GPT-4o-mini with vision capabilities to identify unsafe visual content.

This step listens for content submissions and emits analysis results. Unlike the API step, this is an event-driven step that runs in the background.

Dual Analysis Approach

The handler processes text and images differently but combines the results:

    // Text analysis using OpenAI Moderation API
    const moderationResponse = await openai.moderations.create({
      input: text,
    });

    // Image analysis using GPT-4o-mini Vision
    const visionResponse = await openai.chat.completions.create({
      model: 'gpt-4o-mini',
      messages: [/* vision prompt */],
      response_format: { type: 'json_object' },
    });

Text analysis returns categorical toxicity scores, while image analysis uses a structured prompt to evaluate visual safety. The system takes the maximum score between text and images as the overall risk level.
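The score combination can be sketched as two small functions. One assumption is labeled in the code: the text score is taken as the highest value among the Moderation API's per-category scores, which the article does not spell out. `textRiskScore` and `overallRiskScore` are hypothetical names, and the sample scores are made up for illustration.

```typescript
// Per-category scores in the 0..1 range, as returned in the Moderation API's
// category_scores field.
type CategoryScores = Record<string, number>;

// Assumption: the text risk is the highest score across all categories.
function textRiskScore(categoryScores: CategoryScores): number {
  const values = Object.values(categoryScores);
  return values.length > 0 ? Math.max(...values) : 0;
}

// Overall risk is the maximum of whichever scores are present.
function overallRiskScore(textScore?: number, imageScore?: number): number {
  const present = [textScore, imageScore].filter(
    (s): s is number => typeof s === 'number',
  );
  return present.length > 0 ? Math.max(...present) : 0;
}

// Illustrative values only.
const sampleTextScore = textRiskScore({ harassment: 0.12, hate: 0.03, violence: 0.4 });
const sampleOverall = overallRiskScore(sampleTextScore, 0.25); // 0.4: the text score dominates
```

Taking the maximum is the conservative choice: a harmless caption cannot dilute the score of an unsafe image, and vice versa.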

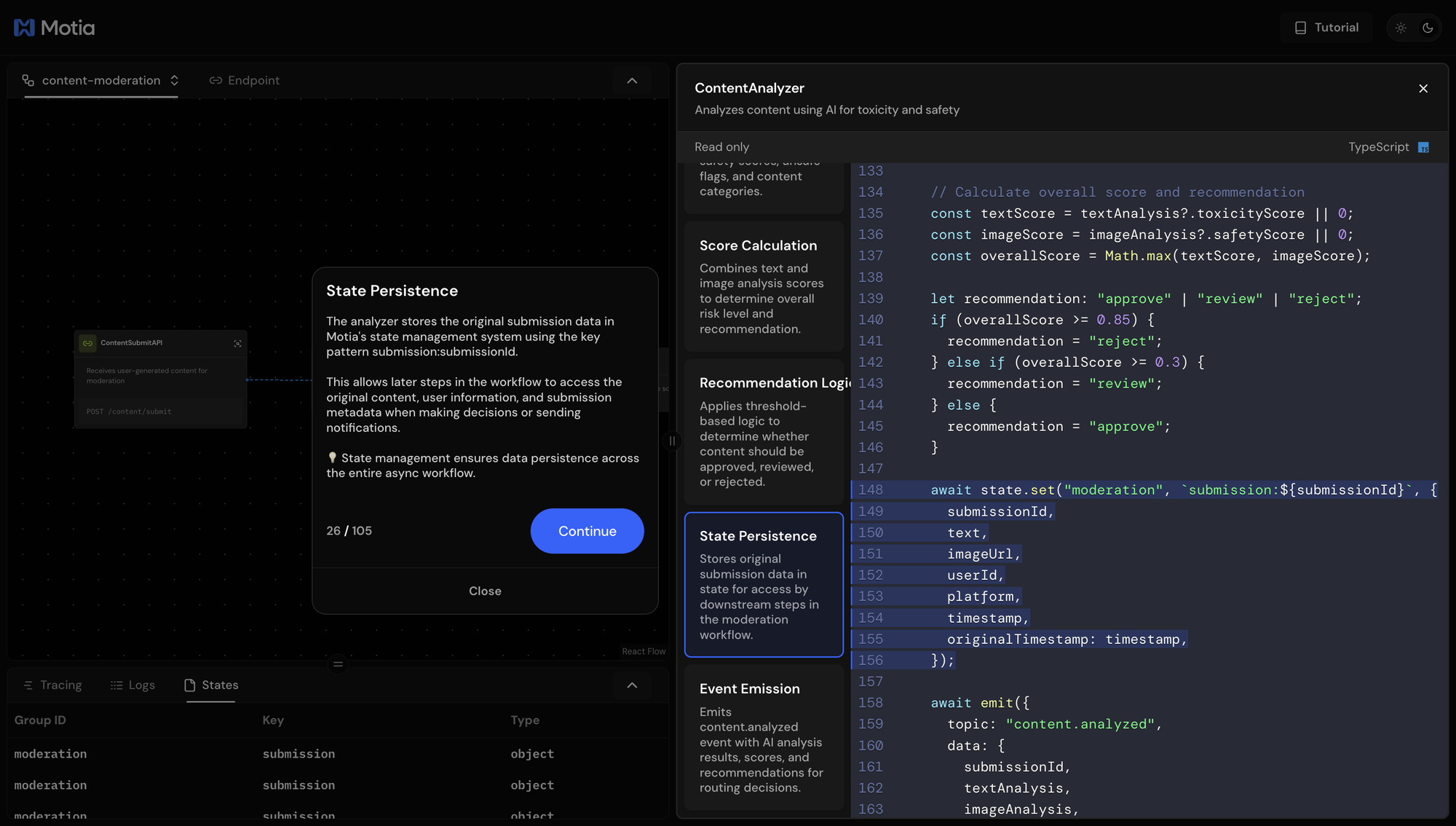

State Management

After the analysis, the step stores the original submission data in Motia's state system.

This allows later steps to access the original content for display in Slack notifications.
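A minimal sketch of that storage step is shown here with an in-memory stand-in for the state store. The `(group, key, value)` call shape and the `submission:<id>` key mirror the `state.set` calls that appear in the later steps; `InMemoryState`, `StoredSubmission`, and `storeSubmission` are hypothetical names, and the real state API may differ in its return types.

```typescript
// In-memory stand-in for a grouped key-value state store (hypothetical class).
class InMemoryState {
  private store = new Map<string, unknown>();

  async set<T>(group: string, key: string, value: T): Promise<T> {
    this.store.set(`${group}/${key}`, value);
    return value;
  }

  async get<T>(group: string, key: string): Promise<T | null> {
    const value = this.store.get(`${group}/${key}`) as T | undefined;
    return value ?? null;
  }
}

// Original submission data that later steps need for display in Slack.
interface StoredSubmission {
  submissionId: string;
  text?: string;
  imageUrl?: string;
  userId: string;
  platform: string;
}

// Store the submission under the same group and key pattern the later steps use.
async function storeSubmission(
  state: InMemoryState,
  submission: StoredSubmission,
): Promise<void> {
  await state.set('moderation', `submission:${submission.submissionId}`, submission);
}
```

Because every step reads and writes the same `submission:<id>` key, the submission ID is the only value that has to travel in each event for a later step to recover the full record.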

The step emits content.analyzed events with confidence scores that the next step uses for routing decisions. Content with clear scores gets auto-processed, while uncertain content continues to human review.

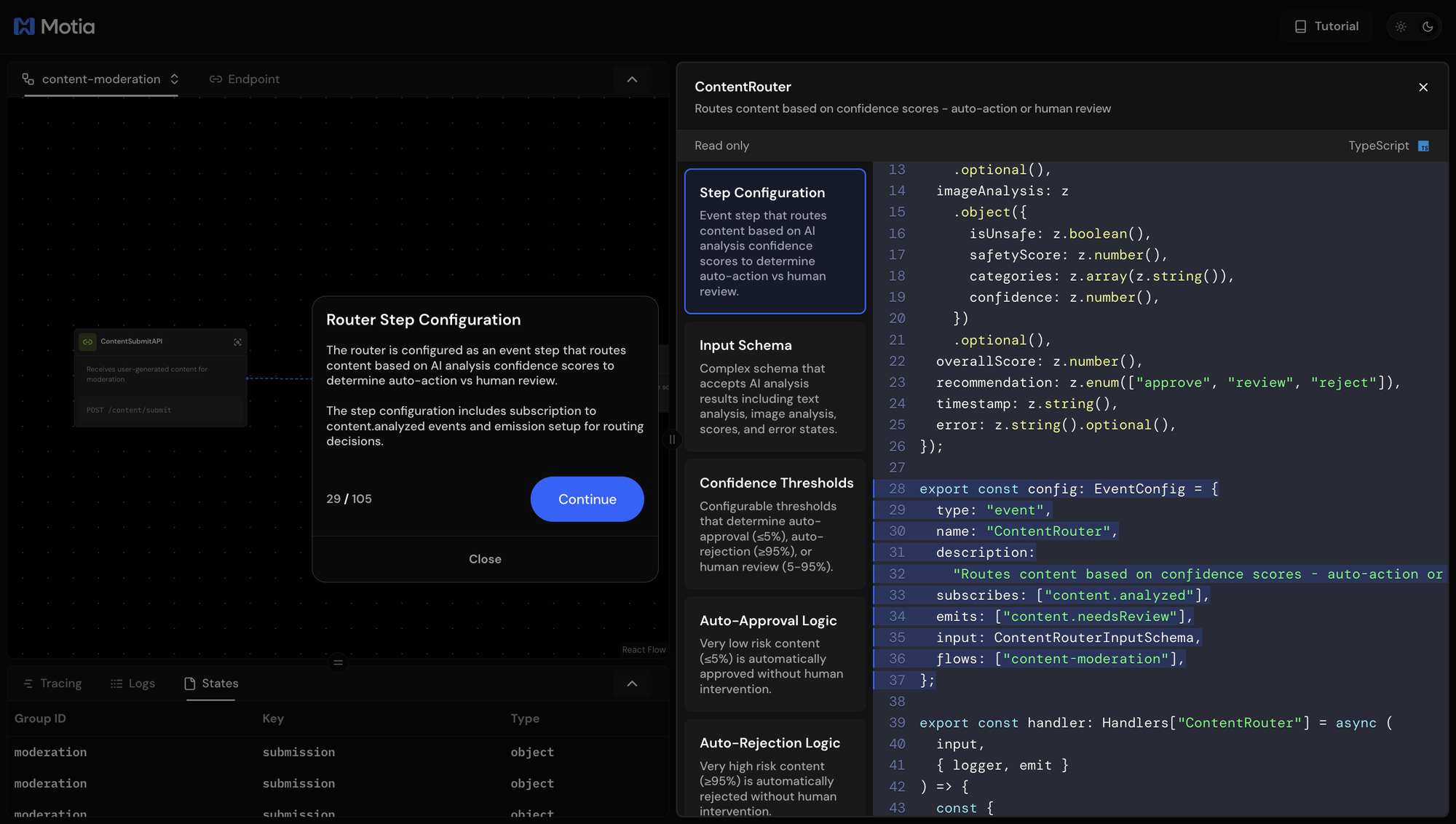

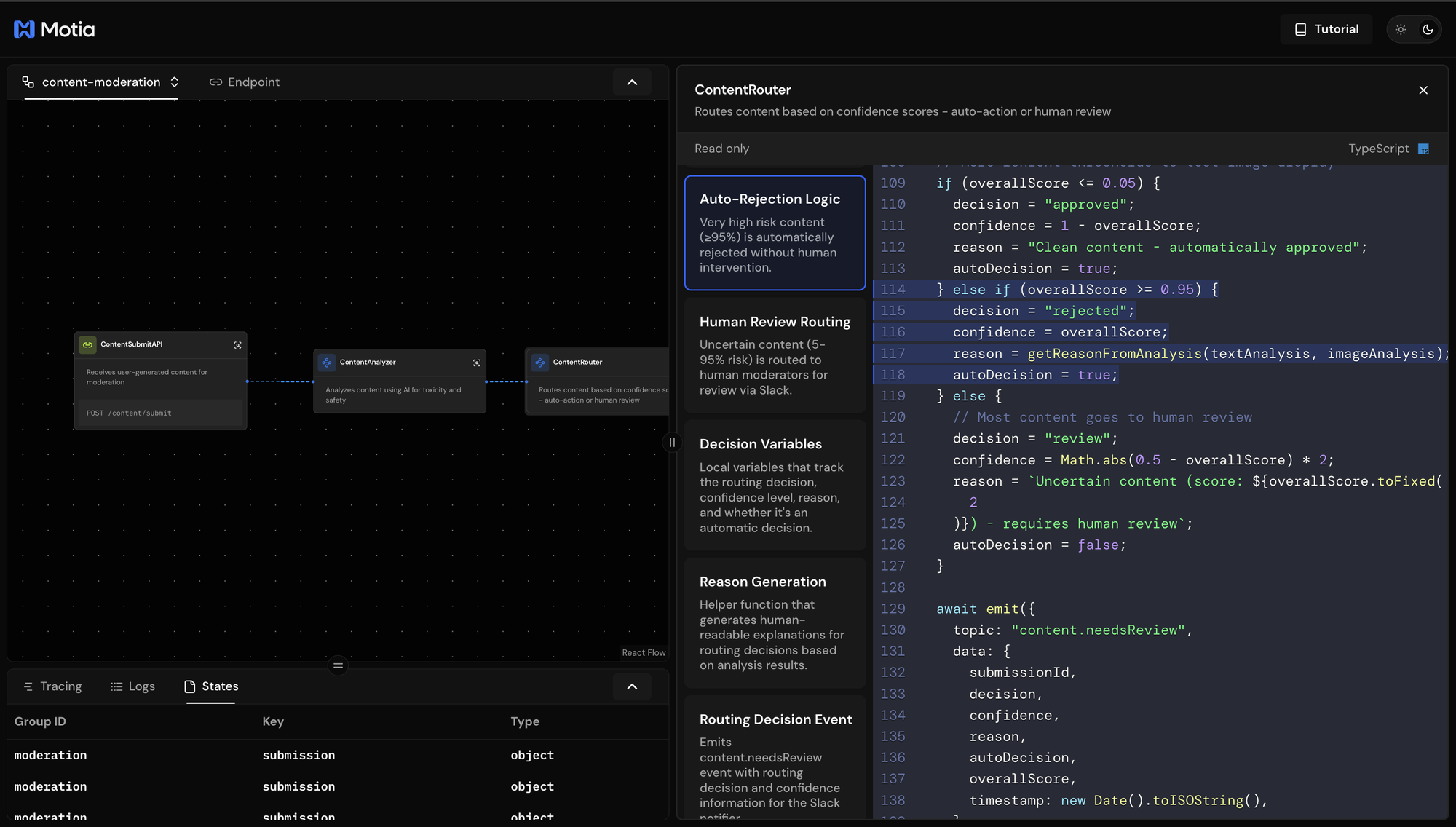

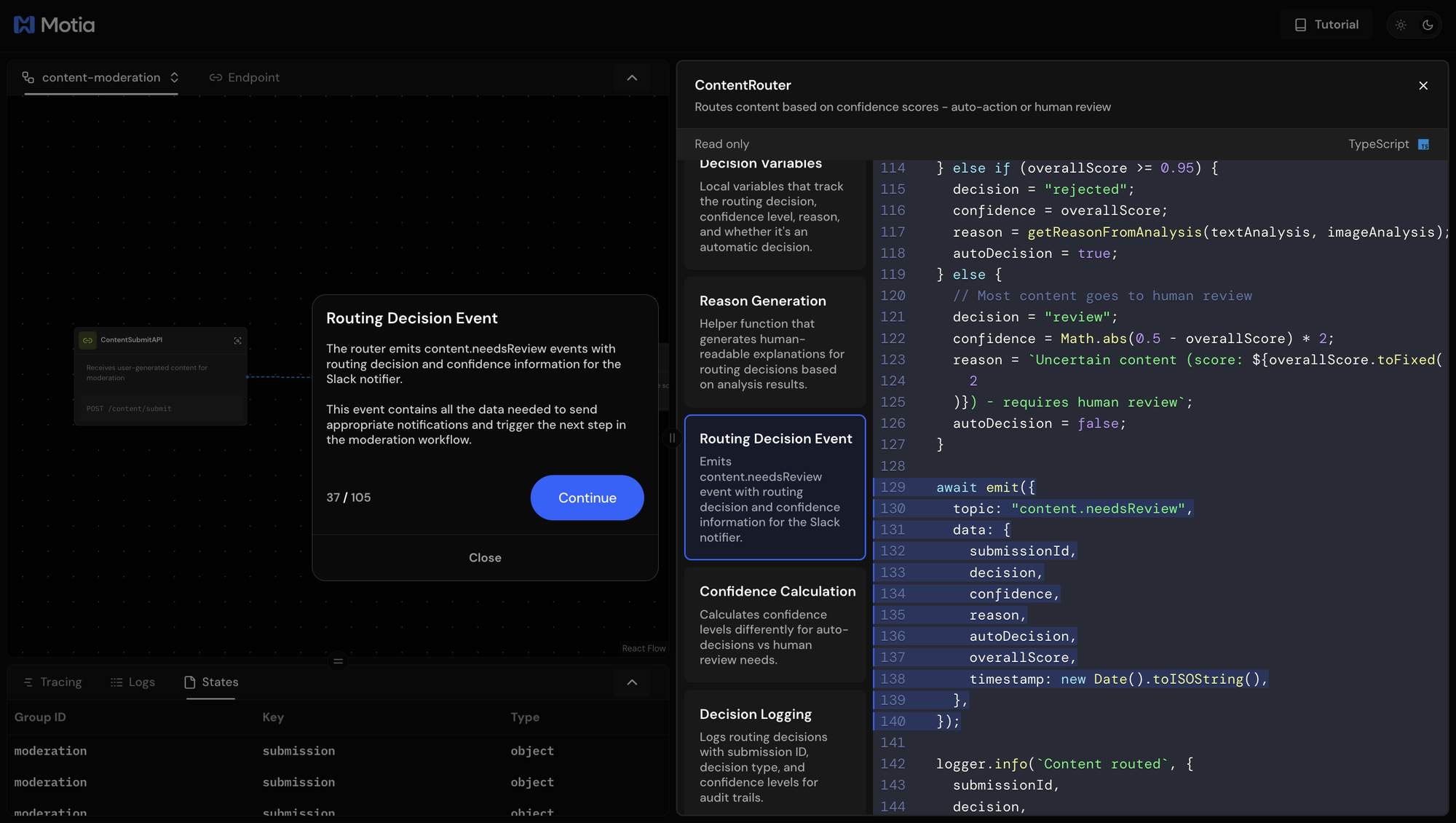

The Third Step: Decision Router

The third step acts as the system's traffic controller, deciding whether content gets auto-processed or sent to human moderators based on AI confidence scores.

What This Step Does

The router subscribes to content.analyzed events and examines the overall risk scores. Content with very low risk (≤5%) gets auto-approved, very high risk (≥95%) gets auto-rejected, and everything in between goes to human review via Slack.

This step processes analysis results and determines the next action. The routing logic is configurable: you can adjust thresholds based on your platform's risk tolerance.

Confidence-Based Routing Logic

The handler implements a three-tier decision system:

    if (overallScore <= 0.05) {
      decision = 'approved';
      autoDecision = true;
    } else if (overallScore >= 0.95) {
      decision = 'rejected';
      autoDecision = true;
    } else {
      decision = 'review';
      autoDecision = false;
    }

Auto-decisions skip human review entirely and go straight to execution. Manual reviews get routed to Slack with detailed reasoning and confidence metrics for human moderators.
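One way to make the thresholds adjustable is to read them from the environment and fall back to the 5% and 95% defaults. This is a sketch under assumptions: the variable names `AUTO_APPROVE_THRESHOLD` and `AUTO_REJECT_THRESHOLD` and the `loadThresholds` and `route` functions are hypothetical and do not come from the example project.

```typescript
type Decision = 'approved' | 'rejected' | 'review';

interface Thresholds {
  autoApprove: number; // scores at or below this are auto-approved
  autoReject: number;  // scores at or above this are auto-rejected
}

// Reads thresholds from an env-like object; invalid or missing values fall
// back to the defaults. The variable names are hypothetical.
function loadThresholds(env: Record<string, string | undefined>): Thresholds {
  const parse = (raw: string | undefined, fallback: number): number => {
    if (raw === undefined || raw.trim() === '') return fallback;
    const n = Number(raw);
    return Number.isFinite(n) && n >= 0 && n <= 1 ? n : fallback;
  };
  return {
    autoApprove: parse(env.AUTO_APPROVE_THRESHOLD, 0.05),
    autoReject: parse(env.AUTO_REJECT_THRESHOLD, 0.95),
  };
}

// Same three-tier logic as above, with the thresholds passed in.
function route(
  overallScore: number,
  thresholds: Thresholds,
): { decision: Decision; autoDecision: boolean } {
  if (overallScore <= thresholds.autoApprove) {
    return { decision: 'approved', autoDecision: true };
  }
  if (overallScore >= thresholds.autoReject) {
    return { decision: 'rejected', autoDecision: true };
  }
  return { decision: 'review', autoDecision: false };
}
```

A stricter platform might lower the reject threshold to 0.9 so that more borderline content is blocked automatically, at the cost of more false positives.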

Monitoring Router Decisions

The Workbench shows routing decisions in real-time. You can see which content gets auto-processed versus sent to humans, along with confidence scores and reasoning. This data helps tune thresholds for optimal balance between automation and accuracy.

The router emits content.needsReview events that trigger either immediate action execution (for auto-decisions) or Slack notifications (for human review).

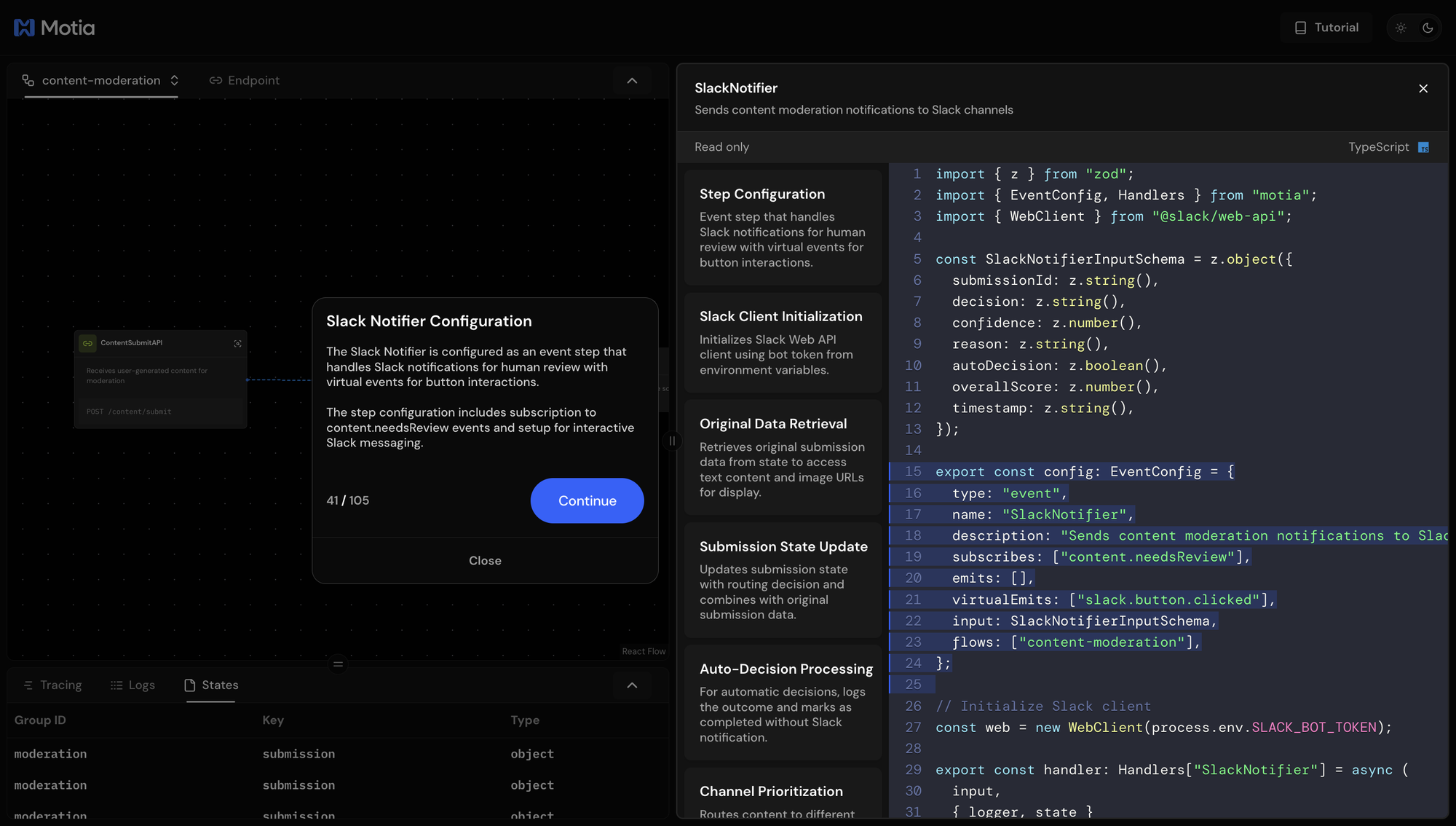

The Fourth Step: Slack Notification

The fourth step creates interactive messages in Slack channels, giving human moderators everything they need to make decisions about uncertain content.

What This Step Does

The notifier subscribes to content.needsReview events and handles two paths: auto-decisions get logged and completed immediately, while human reviews generate rich Slack messages with content previews, risk scores, and interactive buttons.

This step uses virtualEmits to show the connection to the webhook handler in the visual workflow, even though the actual event comes from user button clicks.

Priority-Based Channel Routing

The system routes content to different Slack channels based on risk levels:

    function getSlackChannel(overallScore: number): string {
      if (overallScore >= 0.7) {
        return process.env.SLACK_CHANNEL_URGENT || '#content-urgent';
      } else if (overallScore >= 0.5) {
        return process.env.SLACK_CHANNEL_ESCALATED || '#content-escalated';
      }
      return process.env.SLACK_CHANNEL_MODERATION || '#content-moderation';
    }

Content scoring 0.7 or higher goes to the urgent channel, where senior moderators can respond quickly. Scores from 0.5 up to 0.7 go to the escalated channel, and everything lower goes to the standard moderation channel.

State Coordination

The step retrieves original submission data from state and stores message metadata for the webhook handler.

This creates the connection between Slack messages and workflow state that the webhook handler needs.
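The link between a Slack message and a submission can be sketched in two parts: buttons that carry the submission ID in their `value`, and a small record of where the message was posted. The block structure follows Slack's Block Kit format; the `action_id` values, the function names, and the `SlackMessageRef` type are assumptions made for this sketch.

```typescript
type ModerationAction = 'approve' | 'reject' | 'escalate';

// Builds the actions block. Each button's value is a JSON string holding the
// submission ID and the action, so the webhook handler can parse it back out.
function buildActionButtons(submissionId: string) {
  const button = (
    label: string,
    action: ModerationAction,
    style?: 'primary' | 'danger',
  ) => ({
    type: 'button',
    text: { type: 'plain_text', text: label },
    action_id: `moderation_${action}`, // hypothetical naming scheme
    value: JSON.stringify({ submissionId, action }),
    ...(style ? { style } : {}),
  });

  return {
    type: 'actions',
    elements: [
      button('Approve', 'approve', 'primary'),
      button('Reject', 'reject', 'danger'),
      button('Escalate', 'escalate'),
    ],
  };
}

// Where the message lives in Slack; needed later to update it in place.
interface SlackMessageRef {
  channel: string;
  messageTs: string;
}

// Merges the message reference into the stored submission record.
function attachMessageRef<T extends object>(
  submission: T,
  ref: SlackMessageRef,
): T & SlackMessageRef & { status: string } {
  return { ...submission, ...ref, status: 'pending_review' };
}
```

Keeping `channel` and `messageTs` in state is what lets the final step update the original message after a moderator clicks a button.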

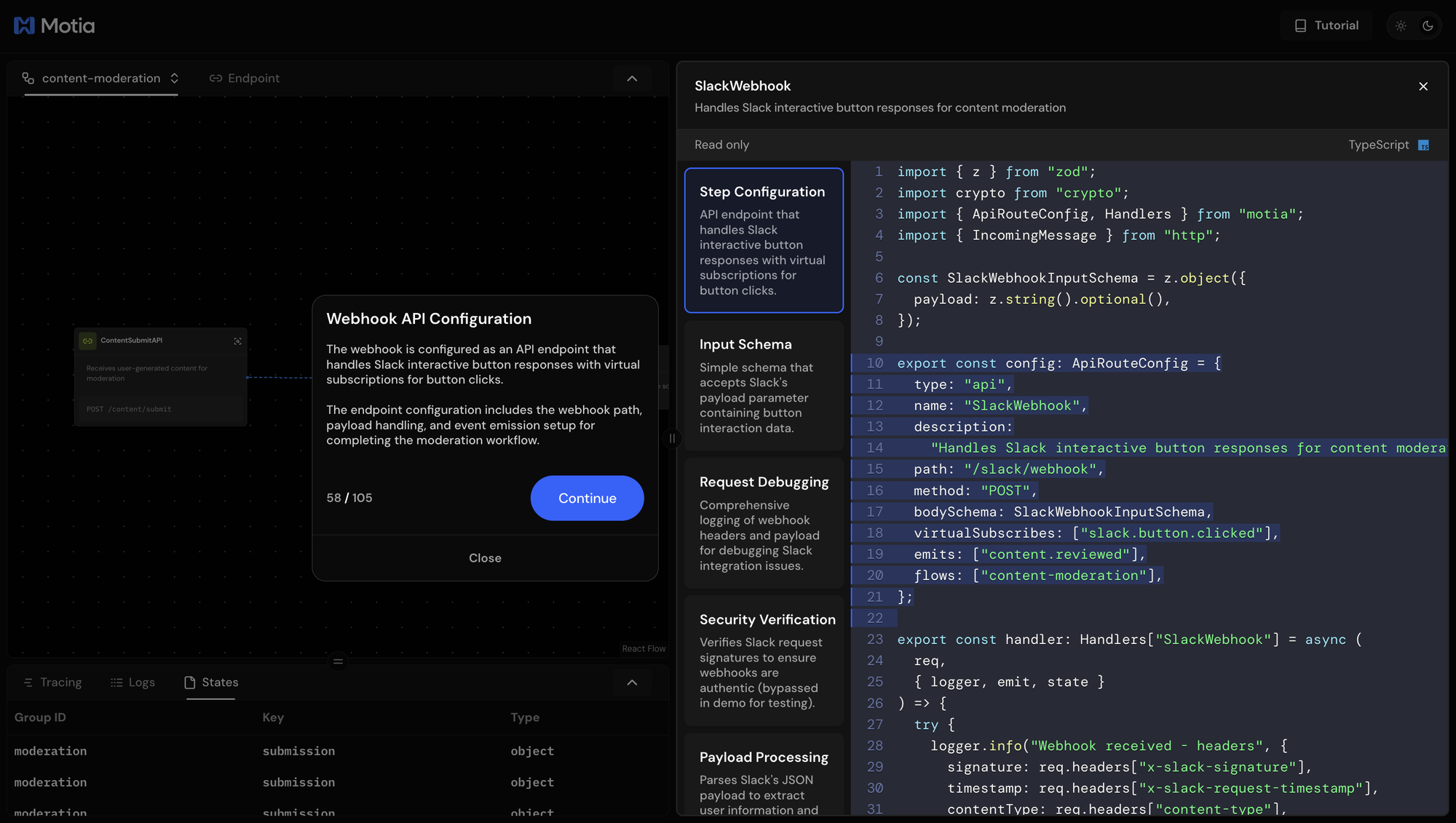

The Fifth Step: Slack Webhook Handler

The fifth step processes button clicks from Slack, handling the human decision-making part of the workflow and converting moderator actions into system events.

What This Step Does

The webhook handler receives POST requests from Slack when moderators click approve, reject, or escalate buttons. It validates the requests, extracts the decision data, updates workflow state, and emits events to trigger final actions.

This API endpoint receives Slack's form-encoded webhook payloads and transforms them into workflow events. The virtualSubscribes shows the logical connection from Slack notifications.

Webhook Payload Processing

Slack sends button interactions as form-encoded data that needs parsing:

    if (!req.body.payload) {
      return { status: 400, body: { error: 'Missing payload' } };
    }

    const payload = JSON.parse(req.body.payload);
    const { type, user, actions } = payload;
    const actionData = JSON.parse(actions[0].value);
    const { submissionId, action: moderationAction } = actionData;

The handler extracts the submission ID and moderator's decision (approve/reject/escalate) from the nested JSON structure that Slack provides.
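The demo bypasses Slack signature verification, so anyone who knows the URL could post fake decisions. For anything beyond local testing, a check along the following lines is worth adding. It follows Slack's documented signing scheme (HMAC-SHA256 over `v0:{timestamp}:{rawBody}` using the signing secret). The function name and parameter object are hypothetical, and the sketch assumes your handler can access the raw, unparsed request body and headers, which depends on the framework.

```typescript
import { createHmac, timingSafeEqual } from 'node:crypto';

interface SlackSignatureInput {
  signingSecret: string; // SLACK_SIGNING_SECRET
  timestamp: string;     // X-Slack-Request-Timestamp header
  signature: string;     // X-Slack-Signature header, formatted as "v0=<hex>"
  rawBody: string;       // raw, unparsed request body
  nowSeconds?: number;   // injectable clock, mainly for tests
}

// Returns true only if the request is recent and the signature matches.
function verifySlackSignature(input: SlackSignatureInput): boolean {
  const now = input.nowSeconds ?? Math.floor(Date.now() / 1000);
  const ts = Number(input.timestamp);

  // Reject requests older than five minutes to limit replay attacks.
  if (!Number.isFinite(ts) || Math.abs(now - ts) > 60 * 5) return false;

  const base = `v0:${input.timestamp}:${input.rawBody}`;
  const expected =
    'v0=' + createHmac('sha256', input.signingSecret).update(base).digest('hex');

  // Constant-time comparison; lengths must match before timingSafeEqual.
  const a = Buffer.from(expected, 'utf8');
  const b = Buffer.from(input.signature, 'utf8');
  return a.length === b.length && timingSafeEqual(a, b);
}
```

If the check fails, the handler would typically return a 401 response before parsing the payload.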

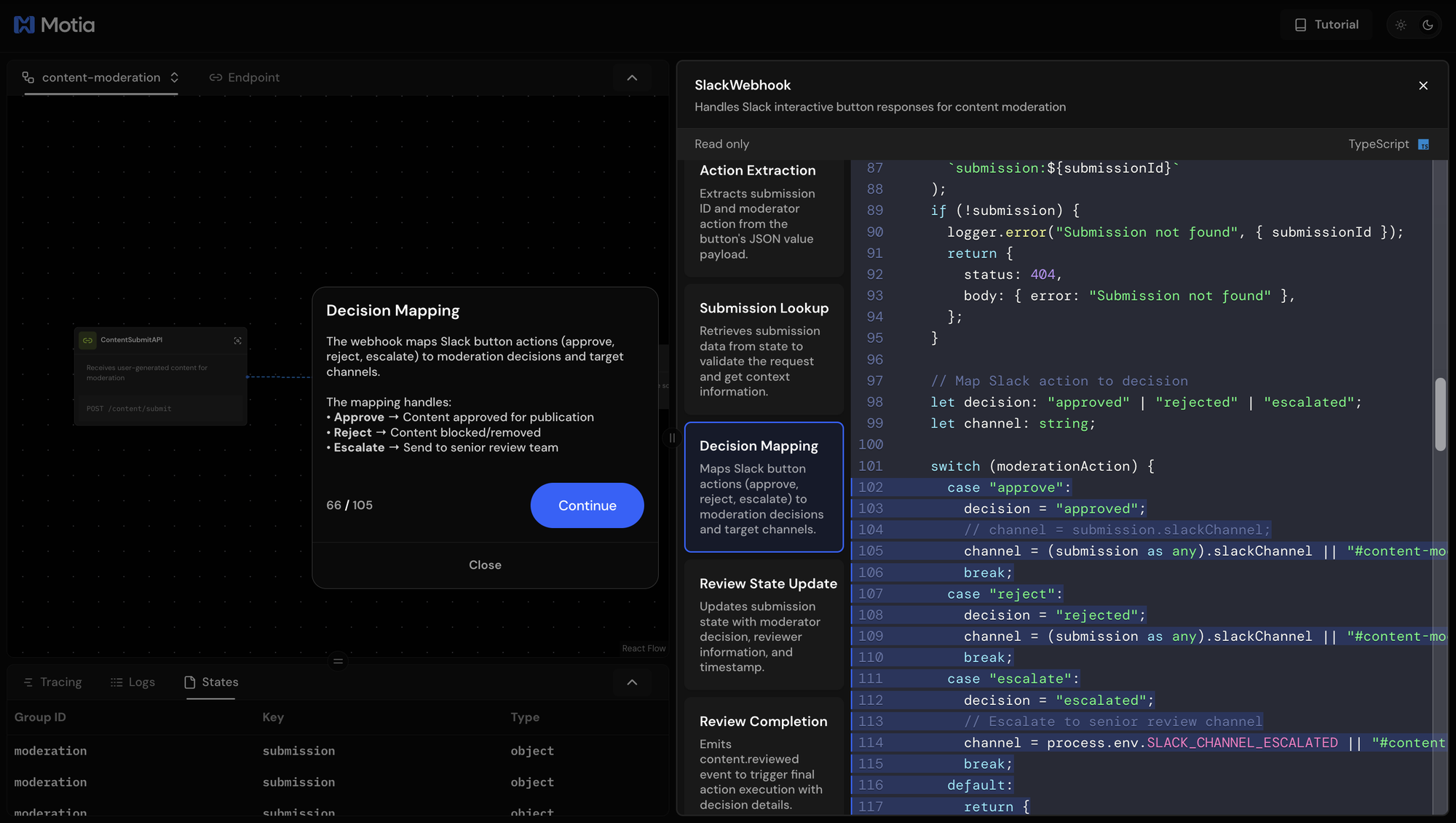

Decision Mapping

Button clicks get mapped to workflow decisions:

    switch (moderationAction) {
      case 'approve':
        decision = 'approved';
        break;
      case 'reject':
        decision = 'rejected';
        break;
      case 'escalate':
        decision = 'escalated';
        channel = process.env.SLACK_CHANNEL_ESCALATED;
        break;
    }

Escalated content gets routed to senior review channels, while approved and rejected content moves to final execution.

State Updates and Event Emission

The handler updates submission state with reviewer information and emits completion events:

    await state.set('moderation', `submission:${submissionId}`, {
      ...submission,
      status: 'reviewed',
      decision,
      reviewedBy: user.username,
      reviewedAt: new Date().toISOString(),
    });

    await emit({
      topic: 'content.reviewed',
      data: { submissionId, decision, reviewedBy: user.username, /* ... */ },
    });

This creates the audit trail showing who made the decision and when, while triggering the final action step.

The Sixth Step: Action Executor

The final step executes moderation decisions and completes the workflow by taking concrete actions on the content and updating all stakeholders about the final outcome.

What This Step Does

The executor subscribes to content.reviewed events and handles the final stage: executing the approved/rejected/escalated decisions, updating Slack messages with completion status, and marking submissions as complete in the workflow state.

This step has no emits since it's the terminal step in the workflow. It completes the moderation process and records the final status in state.

Decision Execution Logic

The handler implements different actions based on the final decision:

    switch (decision) {
      case 'approved':
        logger.info('Publishing content', { submissionId, userId, platform });
        // TODO: Implement actual content publishing logic
        break;
      case 'rejected':
        logger.info('Blocking content', { submissionId, userId, platform });
        // TODO: Implement actual content blocking logic
        break;
      case 'escalated':
        logger.info('Escalating content for senior review', { submissionId });
        // TODO: Implement escalation workflow
        break;
    }

In production, these would connect to your platform's content management APIs to actually publish, block, or escalate the submissions.

Slack Message Updates

The executor updates the original Slack message to show completion status:

    await web.chat.update({
      channel,
      ts: messageTs,
      blocks: [
        // Header blocks require a plain_text text object
        { type: 'header', text: { type: 'plain_text', text: '✅ Content Review - APPROVED' } },
        // Updated fields showing final decision and reviewer
        { type: 'context', elements: [{ type: 'mrkdwn', text: '✅ Action executed successfully' }] },
      ],
    });

Moderators see their decisions reflected immediately with clear completion indicators and execution timestamps.

Final State Management

The step marks submissions as complete and maintains the audit trail:

    await state.set('moderation', `submission:${submissionId}`, {
      ...submission,
      status: 'completed',
      finalDecision: decision,
      executedAt: new Date().toISOString(),
      completedBy: reviewedBy,
    });

This creates a complete record of the moderation process from submission to final action.

Testing the Complete System

The tutorial provides two ways to test the content moderation system:

Option 1: Tutorial-Guided Testing

Interactive Tutorial: Follow the tutorial's "Testing Content Submission" section for guided testing. The tutorial will automatically fill in test payloads and submit them for you, then show you the real-time workflow execution.


The tutorial handles the API calls and demonstrates:

- How different content types trigger different routing decisions

- Real-time workflow execution across all steps

- Slack integration with actual button interactions

- Complete observability through tracing and state management

Option 2: Manual API Testing

For hands-on experimentation, test the API directly through the Workbench Endpoints section:

    // Clean content (should auto-approve)
    {
      "text": "Great product, thanks for sharing!",
      "userId": "user123",
      "platform": "web"
    }

    // Borderline content (triggers human review)
    {
      "text": "This might be somewhat controversial...",
      "userId": "user456",
      "platform": "mobile"
    }

    // Image content
    {
      "imageUrl": "https://example.com/image.jpg",
      "userId": "user789",
      "platform": "web"
    }

Submit these payloads manually and watch the workflow process your content through the complete moderation pipeline.

Workflow Monitoring

Tutorial Integration: The tutorial's "Workflow Observability" section shows you how to monitor the complete pipeline using Motia's built-in tools.

Whether you use tutorial-guided or manual testing, monitor the workflow through:

- Tracing: See step-by-step execution timing and status

- Logs: Debug issues and monitor performance details

- State: View complete submission audit trails and decision history

The tutorial does the heavy lifting for demonstrations, while manual testing lets you experiment with your own content scenarios.

Conclusion

You've built a complete AI content moderation system using six connected workflow steps in Motia. The system receives content via API, analyzes it with OpenAI's text and image models, routes decisions based on confidence scores, sends uncertain content to Slack for human review, processes moderator decisions via webhooks, and executes final actions. Auto-decisions bypass human review entirely, while uncertain content gets interactive Slack messages with approve, reject, and escalate buttons.

The event-driven architecture means each step only runs when needed and connects to the next through simple event emissions. Adding features like sentiment analysis or custom notification rules just requires inserting new steps into the workflow chain. The system demonstrates how AI confidence scoring can reduce human moderation workload while maintaining quality control through strategic human oversight for edge cases.

The complete source code is available in our GitHub repository. Thanks for reading to the end. Please star (⭐️) our repo while you're here.